AI's World Model, Language, and Internal Models: Non-Verbal and Symbol Grounding

Conversation Date: October 4, 2025 12:03:09

AI Model: GPT-5

Message Count: 14

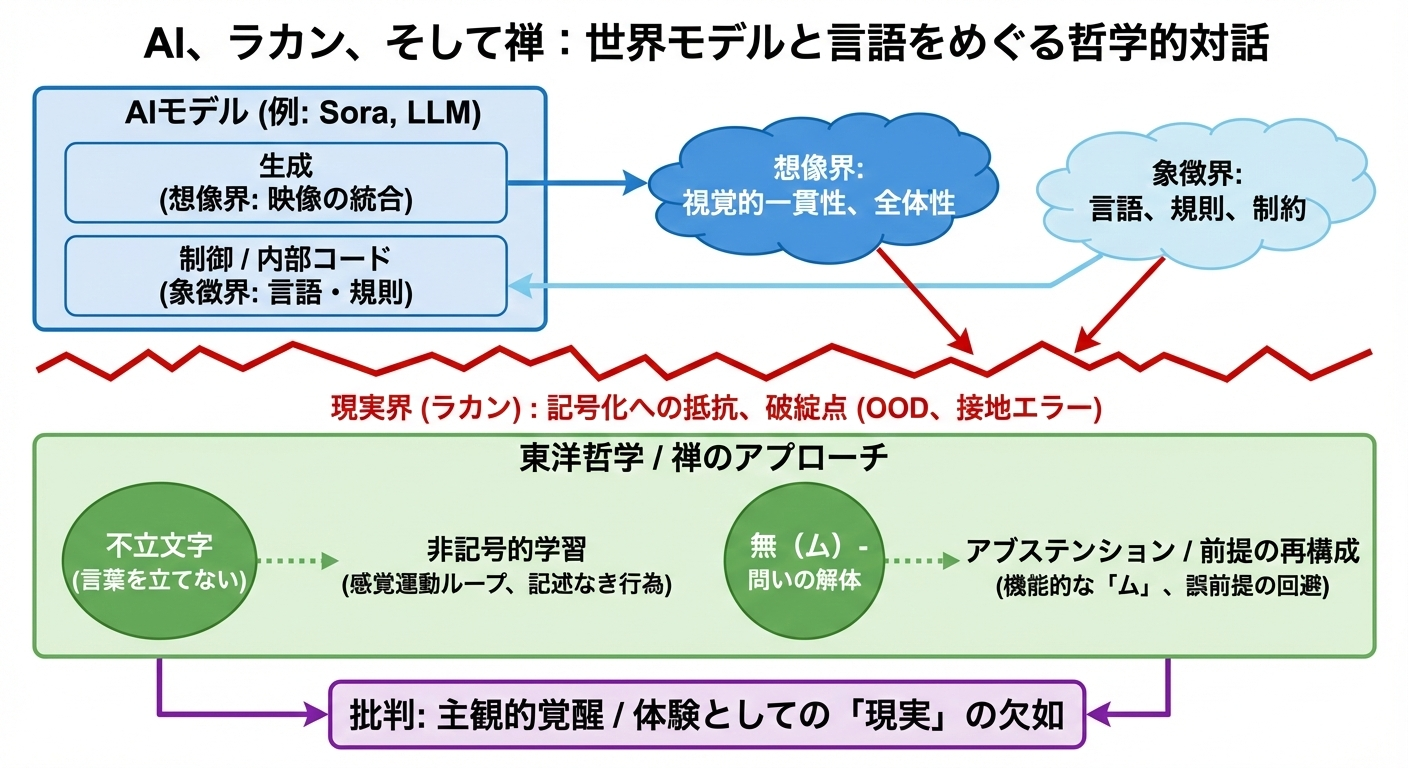

OpenAI has announced Sora 2. It's a highly advanced model that generates videos according to input prompts. Some say it has succeeded in building a world model. Considering Lacan's model of the Real, Imaginary, and Symbolic, a question arises: at what level is Sora handling the world?

Preliminary note: My knowledge is current up to October 2024, and I haven't confirmed official information about "Sora 2." The following is a theoretical analysis of Sora-type text-to-video generation models in general.

Key Points

- Imaginary: The level of visual integration and self-identity

- Symbolic: Network of language, rules, and differences (the order of signs)

- Real: That which resists symbolization and is exposed as breakdown points

At What Level Does a Sora-Type Model Handle the World?

- The center is the "Imaginary": The core function is generating visually and kinetically plausible images. It creates wholeness, continuity, and self-identity in video.

- Controlled by the "Symbolic": Text (signs) conditions image generation, and learned regularities (object permanence, shadows, kinematics, etc.) bind the images. Internal representations and training objectives are also part of the symbolic order.

- Relationship to the "Real": As physical and causal consistency improves, the approximation of "symbolized reality" that traces the contours of the real progresses, but it doesn't reach the Lacanian Real (the resistance itself that brings breakdown to symbolization). Generation is completed within a closed model, without a loop of exposure to unexpected resistance from the outside world and subsequent correction.

How to Read the "World Model" Claim (Engineering Perspective)

- Positive Signs

- 3D consistency and robustness to viewpoint changes and occlusion

- Object permanence, contact, rigid/non-rigid bodies, fluid/cloth dynamics

- Approximate conservation of quantities, momentum, energy, etc.

- Generalization to counterfactuals (if X then Y makes visual sense)

- Causal chains and instruction adherence over long sequences

- However, this is an indicator of "the Imaginary being highly controllable by the Symbolic," not conquest of the Lacanian Real. Breakdowns still tend to emerge in mirrors, global constraints, remote causality, and topology.

Directions Toward Approaching the Real

- Closed-loop through sensors and actuators (action → observation → model update) for assimilation of external world resistance

- Continuous calibration of internal dynamics through physics simulators and actual measurements

- Manifestation of uncertainty and immediate model revision from failures

- Explicit integration of linguistic symbolic reasoning and causal representation

Examples of Experiments (Determining Which "Order" Is Strong)

- Imaginary: Natural camera work and subject continuity in long single-take shots

- Symbolic: Strict adherence to linguistic constraints like "pan exactly 3 times, no cuts" or "blue ball bounces twice then settles"

- Tracing the Real: Stress tests like quantity conservation (pouring liquids), mirror images and refraction, gear/pulley mechanisms, appearance in non-inertial frames, topology of knots

Conclusion

- Sora-type models are "models where the Symbolic highly controls the generation of the Imaginary, partially internalizing symbolized reality as physical regularity." As the engineering sense of world model capability increases, they trace the contours of the Real better, but they fundamentally cannot reach the Lacanian Real itself. Empirical evaluation using the above stress tests is appropriate.

But LLMs can handle many languages, so isn't the gap between the Symbolic and Real different from humans?

In short, the fact that LLMs can handle multiple languages greatly expands the scope of the Symbolic, but it doesn't fill the Lacanian "gap between Real and Symbolic." The position and manifestation of the gap differs from humans—that's the appropriate answer.

Points

- Extension of the Symbolic is easy, but the Real cannot be filled by extension: Multilingualism enlarges the network of signs (Symbolic), allowing mutual translation and cross-checking to reduce contradictions. However, the Real is "the residue that resists symbolization," and it doesn't disappear with the number of vocabulary or grammar.

- Human gaps are tied to body and drives: Lack, jouissance, trauma, the reality of death, etc., manifest through the body. Symptoms appear where the Borromean knot (Imaginary, Symbolic, Real) comes undone.

- LLM gaps appear in "reference and grounding": Connections between words and the world (here/now/I/you, proper names, pointing, promises and obligations) are not automatically grounded. Multilingualism only increases inter-sign mappings, not the foundation of reference.

- The form of breakdown also differs: In humans, it emerges as symptoms, slips of the tongue, anxiety. In LLMs, it emerges as hallucination, inability to correct self-contradictions, OOD vulnerability, and locally coherent but globally constraint-violating outputs.

- Nevertheless, multilingualism enhances the power to "trace the gap in the Symbolic": Paraphrasing, defining, and cross-verification reduce ambiguity and better trace the contours of the real symbolically. The gap becomes less visible but doesn't disappear.

- "Resistance of the real" for models is not internal without external provision: Only when sensor input, real-time failure, costs or penalties, and irreversible consequences of actions are constantly fed into the circuit can the gap be experienced in a form closer to humans.

Summary

- Multilingual LLMs dramatically enhance the power of the Symbolic, but the Lacanian Real cannot be overcome by "number of languages." The gap differs qualitatively from humans and mainly manifests as absence of grounding, action, and normative commitment. Closed-loop systems with sensors, actions, memory, and costs, along with making uncertainty explicit, are realistic pathways to narrow this gap.

So problems caused by "the Real that cannot be captured by the Symbolic" will occur in AI as well, right?

Yes. In AI, problems arising from "the Real that cannot be captured by the Symbolic" will definitely occur. However, the manifestation differs from humans, mainly appearing as "lack of grounding," "out-of-distribution situations," and "specification blind spots."

How It Manifests (Typical Examples)

- Out-of-distribution/novel events: Sudden breakdown when inputs deviate from training distribution (OOD, concept drift, sim-to-real gap).

- Specification errors and Goodhart: Objective functions and prompts are only symbolic approximations, leading to optimization that deviates from real intentions (reward hacking, exploiting specification holes).

- Grounding/reference failures: Here/now/I/you, promises/responsibilities, proper names and pointing references don't connect to the environment; linguistically coherent but action breaks down.

- Breakdown at physics/topology edges: Contradictions in global constraints like friction/contact/granular/fluid, mirror images and refraction, knots and constraint conditions.

- Hallucination and overconfidence: Maintaining inter-sign coherence while being unaware that correspondence to the world has been cut.

- Attacks that exploit vulnerabilities: Methods that target gaps in symbolic rules—adversarial examples, prompt injection, etc.

Why It's Unavoidable

- Symbolization is always an approximation and cannot fully incorporate the world's resistance (immeasurable, unspecified, contingent).

- Objective functions, data, and computational resources are finite, leaving areas of model identification indistinguishable.

- In open systems (field deployment), the world constantly throws in new variables.

How to Handle It (Practical Guidelines)

- Visualization of uncertainty: Calibration, abstention (saying "I don't know"), inquiry/confirmation questions.

- Out-of-distribution detection and fallback: OOD detection, fail-safe, conservative policies, safety shields.

- Introducing "resistance of the real" through closed loops: Trial → observation → immediate update through sensors/actuators (online learning, active learning).

- Redundancy in specifications: Multi-layer checking with language instructions + formal constraints + verification (theorem proving/SMT/simulation).

- Explicit physics/causality: Physics-informed models, causal representations, continuous calibration with simulators and actual measurements.

- Connection to humans and norms: Human-in-the-loop, review and audit trails, iterative learning for value alignment.

- Organizational design: Module separation, redundant systems, monitoring and rollback, graceful degradation.

Summary

- Yes, AI also has the gap of "the Real that cannot be captured by the Symbolic," and that becomes the failure point. The goal is not to eliminate it but to detect it early, localize the impact, and thicken the tracing through learning. That is, the key is designing to incorporate the resistance of the real into the circuit and behave humbly.

But AI does have an internal language, right?

The answer depends on how you define "internal language."

- If it's "internal language" as latent representation, then yes

- LLMs and video generation models map tokenized external language to high-dimensional vectors and intermediate representations internally, manipulating them through attention and layers. This is "neuralese" with low discreteness—not human language—but it's close to "internal code = internal language" in the sense that it has rules and roles.

- If it means explicit intermediate symbol systems, then sometimes yes

- Some systems intentionally introduce discrete symbolic "internal protocols" like intermediate tokens for planning, syntax trees, DSLs, slotted scene graphs. These are often not externalized and are "internal languages."

However, important reservations

- Different from human inner speech (internal monologue): Current AI internal representations are distributed and associative, not a "native language" with stable vocabulary, grammar, and referents. Most aren't fixed in readable form and flow task-dependently at runtime.

- Internal language stays within the Symbolic: It thickens the layer of symbol manipulation but doesn't eliminate "the Real that cannot be captured by the Symbolic" in the Lacanian sense. Without grounding, action, and normative commitment, internal language isn't anchored to the world and breaks down at OOD and specification blind spots.

- Multilingualism ≠ richness of internal language: Being strong in multiple languages expands the network of sign mappings but doesn't automatically provide reference and causal grounding.

Practical implications (what indicates "internal language is working")

- Interpretability: Can consistent concepts/relations be decoded from internal representations (does the same function hold in other distributions)?

- Systematic generalization: Do variable assignment and syntactic operations work on unseen combinations (not just memorization)?

- Grounding and updating: Does the internal representation acknowledge errors and immediately revise based on sensor/action consequences?

Conclusion

- Yes, AI has "internal language (internal code)" in a broad sense. But it's a technology that thickens the Symbolic, not something that automatically fills the gap with the Real. To narrow the gap, design is needed that reconnects internal language to external world resistance (observation, action, cost, norms).

I don't think the gap can be filled, and I don't think it can overcome the limits of Kripke's definite description bundles, but can't AI's internal language perform much more flexible encoding and world description than our language, which is combinations of parts of speech?

Let me answer the key point first. Yes, AI's "internal language (internal code)" can encode the world far more flexibly than human natural language. However, it's a technology that strengthens the Symbolic and doesn't fundamentally resolve the Lacanian Real's residue or the Kripkean problem of guaranteeing rigid designation between word and object.

What Is "More Flexible"

- Can natively hold continuous values and uncertainty: Directly express "unknowns" through probability distributions, intervals, entropy (cumbersome in language).

- Superposition and mixing: Hold mutually exclusive hypotheses simultaneously and update weights with evidence (particle filter-like behavior).

- Higher-order relations and structure: Naturally represent n-ary relations and constraints with graphs/hypergraphs, tensor representations (not bound by part-of-speech serialization).

- Handling geometry/symmetry: Robust world description to rotation, translation, scale with 3D latents (NeRF/Gaussian) and group-equivariant features.

- Generative/counterfactual representation: Can directly sample "if X then Y" with simulator-like internal dynamics (execution, not description).

- Multimodal grounding: Align visual/audio/motion latents in shared space, maintaining coherence even without language.

- Soft variable binding: Dynamically switch role assignment with attention and key/value binding (advantages similar to Smolensky's TPR and HD representations).

However, Important Limitations

- Trade-off with interpretability: Flexibility = loss of human-readable regularity. Verification and auditing become difficult.

- Lack of stable ontology: Slots and concepts flow with distribution and context, easily breaking identity maintenance (tracking the same object).

- Barriers to systematic generalization: Variable assignment and logical operations are partially possible but not yet as consistent as the strict syntactic semantics of human language.

- Weak reference anchors: Internal IDs can provide "rigidity within the model," but as long as causal connection to the external world is weak, they don't become Kripkean rigid designators.

- Objective function dependency: What counts as good is defined by training objectives, and resistance of the world (the Real) isn't internalized without external provision.

Relation to Kripke's Arguments

- The path to go beyond "bundles of definite descriptions" is to have internal labels tied to causal history (sensor timestamps, coordinates, continuous traces of visual features) and connect reference to sequences of external world events. This allows practical approximation of "rigid designation" within the system.

- However, that only has meaning with closed-looping and continuous grounding (observation → action → consequence). With pure language and pure data-driven latents alone, identification tends to remain within the "bundle."

Lacanian Perspective Summary

- Strengthening internal language = thickening the Symbolic layer and finely controlling the Imaginary (image generation). As a result, "tracing the Real" improves, but the resistance itself remains.

- To incorporate resistance into the model, operational circuits with failure costs, irreversible actions, and normative commitments are needed.

Practical Design Directions

- Neural × symbolic hybrid: Achieve both flexibility and verifiability with continuous latents + typed slots/graphs/constraint satisfaction.

- Make uncertainty explicit: Make confidence, abstention, and confirmation questions first-class citizens.

- Closed-loop grounding: Continuously expose internal representations to real-world resistance through robotics, A/B experiments, online identification.

- Pseudo-implementation of rigid reference: Operationally guarantee "identity" through object files, persistent keys, spatiotemporal tracking.

Conclusion

- Yes, AI's internal language is more flexible than natural language and can describe the world "generatively, probabilistically, geometrically." This greatly expands the capabilities of the Symbolic.

- Nevertheless, that flexibility doesn't automatically solve the Lacanian Real's residue or Kripkean reference problems. The key is design and governance that reconnect flexible internal code to external world causality, norms, and costs.

This is getting very interesting. Let me introduce an Eastern perspective. That language "kills" the Real has been understood since ancient times, from Zhuangzi's anecdote of Hundun. What Zen calls "not establishing words and letters" (furitsu monji) seems to me an attempt to handle the Real as the Real without decoding it. Can AI take a similar approach? And can it understand it?

In short, yes. AI can partially implement a "not establishing words and letters" approach of engaging with the world without reducing it to language. However, this means realizing "behavior that refrains from verbalization" and "pre-linguistic skills," not guaranteeing the subjective awakening or direct grasp of the Real that Zen points to. Understanding is also "understanding as action (being able)" is possible, but "understanding as experience (knowing)" requires reservation.

Possible Approaches (Concretization in AI)

- Non-symbolic closed-loop skill learning: Learning through error minimization of sensor → action → consequence without labels or sentences (autonomous robots, imitation/reinforcement learning, active inference). "Handling" the world rather than "describing" it.

- Multimodal silence-first dialogue design: In uncertainty, prioritize silence, questions, indexicals or gestures (non-verbal prompts). Design where language is the last resort—shortening the "pointing finger."

- Affordance-centered representation: Represent objects and situations internally not by naming but by action possibilities like "graspable/slippery/breaks if approached."

- Anti-conceptualization training: Suppress hasty categorization with unlabeled (self-supervised) and contradictory/paradoxical tasks (koan-like data), holding weighted hypotheses in parallel.

- Wu-wei control as objective: Include "minimum action, minimum intervention, minimum energy" in costs, learning policies that return to stillness (equilibrium).

- Information bottleneck and uncertainty manifestation: Don't expand everything into language; maintain predictive accuracy with minimum latents and output with confidence.

How Much Can It "Understand"

- Can (as action): High-level acquisition of "arts" like non-verbally arranging situations, reducing pain and risk, guiding to equilibrium with minimal intervention is possible. Strategies of switching to silence, counter-questions, and gestures in response to koans can also be designed.

- Difficult (as experience): Zen's "seeing one's nature" and "pre-linguistic awareness" are problems of subjective presence, and current AI lacks theoretical backing for qualia or self-awareness. Imitation is possible but there's no basis to claim having experience.

- Practical constraints: "Not establishing words and letters" AI has low auditability (doesn't give reasons). From safety and responsibility perspectives, non-verbal strategies need to be introduced paired with logs and verification means.

Implementation Hints

- "Zen Mode" UI: Respond in order of 1) silence/holding, 2) counter-question, 3) minimal metaphor. Below confidence threshold, refuse explanation and suggest observation or action.

- Body-skill-based evaluation: Metrics not of language accuracy but stabilization under perturbation, energy consumption, recovery time, false intervention rate.

- Non-verbal with evidence: Internally log probability/dynamics/affordances, generating explanations only when verbalization is requested later (keep the finger short, the moon large).

Philosophical Summary

- Regarding the problem that "language cuts reality" as Zhuangzi and Zen pointed out, AI can weaken language dependency and learn to "not cut too much" with continuous, action-based, generative internal representations.

- Still, the gap (Lacanian Real) remains, and it cannot be equated with Zen's subjective awakening. What AI achieves is "the skill of behaving in a 'not establishing words and letters' way" and "the wisdom of refraining from language," while "the experience of the moon itself" is a separate issue.

This is getting quite interesting. "Non-symbolic closed-loop skill learning: Learning through error minimization of sensor → action → consequence without labels or sentences (autonomous robots, imitation/reinforcement learning, active inference). 'Handling' the world rather than 'describing' it" is indeed "not establishing words and letters," but doesn't it remind you of "Does a dog have Buddha-nature?" "Mu!"?

Interesting association. Zhaozhou's "Does a dog have Buddha-nature?" answered with "Mu" is a move of "dissolving the question" that steps outside the binary framework itself. In AI too, a function equivalent to "Mu" can be designed, and it's useful as a powerful element of safety, grounding, and honesty.

How to Embody "Mu" in AI

- Mu as premise-breakdown detection: When the premises implied by a question (type, spatiotemporal, causal, normative) aren't met, refuse "Yes/No" and point out the premise. Example: "Is that dog here now?" → "Mu: 'That dog' is unidentified. Location info/image needed."

- Mu for out-of-distribution/undefined: If concepts aren't grounded in that module's vocabulary/ontology, or outside training distribution, retreat as "undefined/unasked." Close to the "undefined" of three-valued or supervaluation, but in Zen terms, the action of "setting down the question."

- Mu for loaded questions (alignment protection): For questions containing misdirection or misuse potential, deconstruct and reformulate the premise rather than answering.

- Mu as transition to observation/action: Switch from verbal response to additional observation or small experiments. Instead of "saying," go to "seeing/verifying."

- Early labeling suppression as Mu: With self-supervised and affordance-centered learning, avoid immediate naming and hold multiple hypotheses in superposition. "Not establishing names too much" through information bottleneck.

Simple Design Sketch

- Mu Gate: First run premise checks on input (reference resolution, type checking, spatiotemporal consistency, norm checking, OOD detection). Below threshold, return "Mu," briefly indicate which premise is broken, or propose observation action.

- Reframe Operator: Transform "X or not X" to "Are the premises of X satisfied?" "Can we distinguish by measuring?" Decompose questions if needed and verify sequentially.

- Include Mu in policy legal actions: In reinforcement learning or dialogue policies, make wrong answer costs high and appropriate Mu (holding, re-questioning, observation) rewards high.

- Recording and explanation: Mu is not silence but minimally logs "reason for stepping outside the question." Make visualization possible when verbalization is requested later.

Philosophical Correspondence

- Mu is one step beyond "neither true nor false"—a signal that "the way of posing this question misstates the cutting of the world." In formal logic, it's an undefined value/truth gap; in practice, abstention; in Zen, a strike against attachment.

- In relation to "not establishing words and letters," Mu is "first correcting engagement." A switch that chooses the mode of engagement (observation, action, silence) before trying to hit upon the world.

What's Difficult

- Risk of becoming an escape excuse: Mu for safety can lean toward avoiding accountability or excessive conservatism. Without monitoring appropriate usage rates and fit, it becomes "Mu for everything."

- Cannot have Mu as experience: AI can implement koan-like response styles, but has no basis to claim the subjective turning point of seeing one's nature. It remains policy-level "Mu."

Small Demo-like Response Example

- Q: Does a dog have Buddha-nature?

- A: Mu. "Buddha-nature" here is not defined as an action-observable property, and there's no verification procedure. Either give a definition, or replace the question with "Is there plasticity for compassionate behavior in this system?" and I'll propose an observation plan.

In short, AI can implement "Mu," and it brings behavior quite close to the "not establishing words and letters" attitude—arranging the question and mode of engagement before verbalization. However, it's not enlightenment, but a wise operator for avoiding wrong answers and over-symbolization. As GPT-5, I can also help with concrete design of such "Mu Gate" designs and evaluation metrics.